Once upon a time, I built a retro console on a Raspberry Pi B+ loaded with a large library of Atari, NES, TurboGrafx-16, Genesis and SNES titles. It performed well, except for a few games where you could tell the hardware was struggling just by listening to the choppy audio. When the Pi 2 came out, I immediately began porting my custom build to it. Short on time, I optimistically tried a shortcut: upgrading the existing install in place with apt. It failed and left me stuck with a snowflake build that worked on neither the original system nor the new one. Nice work.


The short version: loopback mounting let me open the root partition straight out of a raw disk image, and from there I simply copied the files onto the Micro SD card. Lovely, thank you Linux.

Salvaging the rig

With the system broken and other demands on my time, I let it rest in peace. I still had the disk and knew all of my ROMs and metadata were intact in some form, but the botched upgrade caused a kernel panic on boot. I also couldn't simply reuse the metadata: because I was running my own custom build, it was stored in SQLite rather than in the XML format read by the version of EmulationStation bundled with the stock RetroPie project. I had sunk so much time into scraping multiple game databases, fixing metadata and manually testing ROMs that the prospect of redoing even a fraction of that work depressed me to the point that I didn't even want to look at the dead device. Then someone who had seen the rig working asked if they could borrow it for an event they were hosting. In two days.

Nothing motivates you quite like a clear objective and an immovable deadline. Faced with the simple question "Can I get this working again in a day?", I got out my laptop and mapped out a plan. It was simple:

- Install the stock version of RetroPie for the Pi 2's architecture

- Build the custom version of EmulationStation on-device so I can read the game metadata

- Migrate the ROMs and metadata from the original game disk

- Start it up and play games!

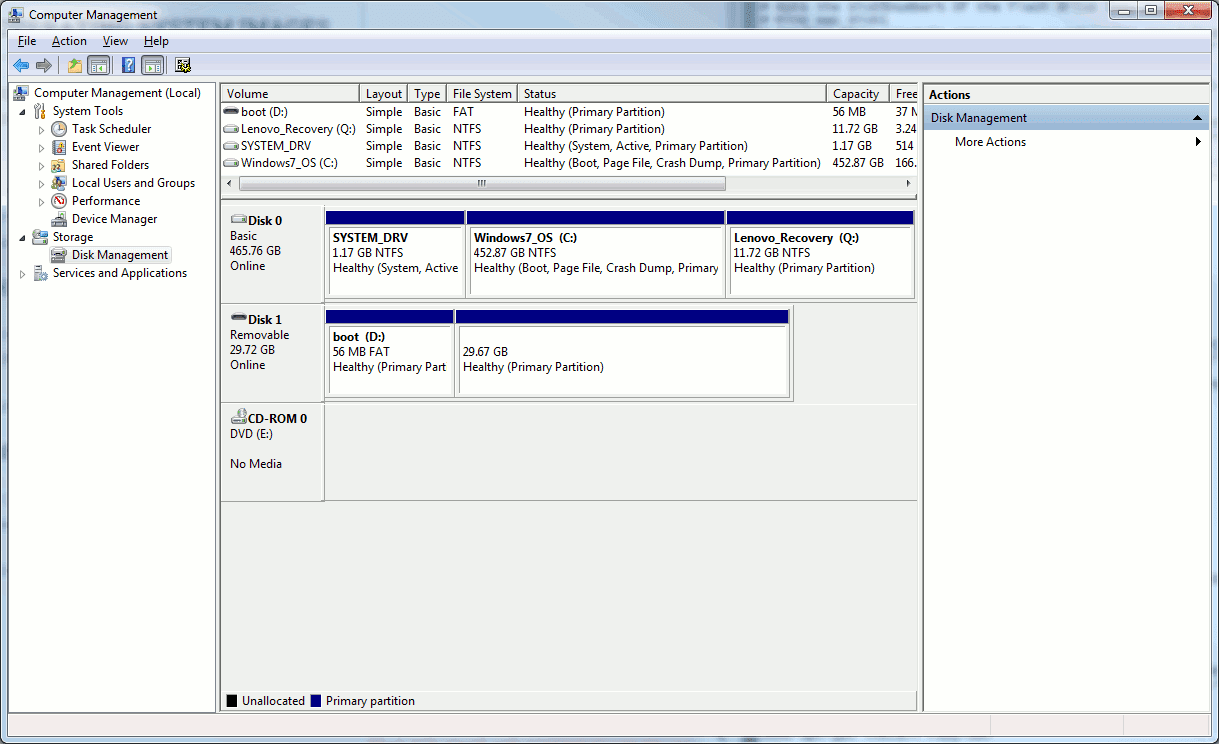

After downloading the RetroPie image for the Pi 2, I used dd to save the contents of the original Micro SD card to an image file, then wrote the RetroPie image to that same card, again with dd. I booted it to confirm I had a working system on the Pi 2, then tweaked the RetroPie scripts that build EmulationStation to use my GitHub fork and branch, and built it. Now I just had to migrate the ROMs and metadata. The problem: I had no spare disk to hold the old files, and I had dumped the entire card as one image rather than just the root partition.
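Both steps above use dd, which at heart is just a byte-for-byte block copy. For illustration, here is a minimal Python sketch of the same idea. The function name `copy_raw`, the 4 MiB block size and any device path such as `/dev/sdX` are hypothetical; check the real device name (for example with `lsblk`) before writing, because pointing this at the wrong disk overwrites it.

```python
import os

BLOCK_SIZE = 4 * 1024 * 1024  # hypothetical 4 MiB blocks, comparable to dd bs=4M


def copy_raw(src_path: str, dst_path: str, block_size: int = BLOCK_SIZE) -> int:
    """Copy src to dst byte-for-byte in fixed-size blocks, like `dd if=src of=dst`.

    Returns the number of bytes copied.
    """
    copied = 0
    with open(src_path, "rb") as src, open(dst_path, "wb") as dst:
        while True:
            chunk = src.read(block_size)
            if not chunk:  # end of the source file or device
                break
            dst.write(chunk)
            copied += len(chunk)
        dst.flush()
        os.fsync(dst.fileno())  # push the data to the card before it is unplugged
    return copied
```

Used the way the post describes, `copy_raw("/dev/sdX", "retro-backup.img")` would back up the old card and `copy_raw("retropie-pi2.img", "/dev/sdX")` would write the stock image (all paths hypothetical, and raw device access normally needs root).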

Loopback to the rescue!

I suspected I could use the loopback file system support to mount the partitions directly from the image. I just didn't know how to do that with a raw whole-disk image, where each partition starts somewhere past the beginning of the file. Let's cut to the chase:
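A hedged sketch of the idea: a raw card image begins with an MBR partition table, so each partition can be mounted by handing its byte offset (start sector × 512) to the loop option of util-linux `mount`. The Python below reads that table and prints a matching mount command per partition. The function names, the 512-byte sector size, the read-only `ro` option and the `/mnt/partN` mount points are assumptions for illustration, and actually running `mount` requires root.

```python
import struct
import sys
from typing import List, NamedTuple

SECTOR_SIZE = 512   # assumption: 512-byte sectors, typical for SD card images
TABLE_OFFSET = 446  # the MBR partition table starts at byte 0x1BE
ENTRY_SIZE = 16     # four 16-byte primary partition entries


class Partition(NamedTuple):
    index: int          # 1-based slot number in the MBR table
    type_id: int        # partition type byte, e.g. 0x0C (FAT32 LBA) or 0x83 (Linux)
    start_sector: int   # LBA of the first sector
    num_sectors: int

    @property
    def byte_offset(self) -> int:
        return self.start_sector * SECTOR_SIZE

    @property
    def byte_size(self) -> int:
        return self.num_sectors * SECTOR_SIZE


def read_mbr_partitions(mbr: bytes) -> List[Partition]:
    """Parse the primary partitions from the first 512 bytes of a raw disk image."""
    if len(mbr) < 512 or mbr[510:512] != b"\x55\xaa":
        raise ValueError("no MBR boot signature (0x55AA) found")
    partitions = []
    for i in range(4):
        start = TABLE_OFFSET + i * ENTRY_SIZE
        entry = mbr[start:start + ENTRY_SIZE]
        type_id = entry[4]
        first_lba, count = struct.unpack_from("<II", entry, 8)  # little-endian uint32s
        if type_id != 0 and count != 0:  # type 0 marks an unused slot
            partitions.append(Partition(i + 1, type_id, first_lba, count))
    return partitions


def mount_command(image_path: str, part: Partition, mount_point: str) -> List[str]:
    """Build (but do not run) a util-linux mount command for one partition.

    'ro' is a hypothetical choice for salvage work: we only copy files out.
    """
    options = f"loop,ro,offset={part.byte_offset},sizelimit={part.byte_size}"
    return ["mount", "-o", options, image_path, mount_point]


if __name__ == "__main__" and len(sys.argv) > 1:
    image = sys.argv[1]  # e.g. the dd backup of the old card
    with open(image, "rb") as f:
        for p in read_mbr_partitions(f.read(512)):
            print(f"partition {p.index} (type {p.type_id:#04x}):",
                  " ".join(mount_command(image, p, f"/mnt/part{p.index}")))
```

Run against the backup (for example `python3 mbr_mount.py retro-backup.img`, file names hypothetical), it prints one command per partition; on a Pi image the second, Linux-type partition is normally the root filesystem holding the ROMs. Parsing the table by hand keeps the sketch dependency-free; `fdisk -l` on the image reports the same start sectors, and `losetup -P` can create per-partition loop devices instead.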